前言

本文基于Android 4.19 Kernel展开讨论,使用的二进制文件来自Android Toybox,可能与真正的Linux系统略有不同

本文探究的主要内容

- 内核空间是如何接收用户传来的优先级的

- 优先级在Linux Kernel中的存储方式

- Top命令中PR、NI两个优先级的含义及其在内核中的对应

前情提要

调度策略概述

Linux内核中存在以下几种任务调度策略

include/uapi/linux/sched.h

/*

* Scheduling policies

*/

#define SCHED_NORMAL 0

#define SCHED_FIFO 1

#define SCHED_RR 2

#define SCHED_BATCH 3

/* SCHED_ISO: reserved but not implemented yet */

#define SCHED_IDLE 5

#define SCHED_DEADLINE 6

其中比较稳定和常用的是SCHED_NORMAL、SCHED_FIFO和SCHED_RRSCHED_NORMAL在用户空间有对应的别名SCHED_OTHER,这个名字被使用在如chrt之类的改变调度策略的命令中

其中,SCHED_OTHER是基于CFS算法的时间片轮转,而SCHED_FIFO和SCHED_RR则是实时(RT)调度策略

实时调度策略的优先级始终高于SCHED_OTHER

此处可以引入RT优先级的概念便于理解(对应chrt中的scheduling_priority、内核中的rt_priority)

(一个任务的rt优先级可以直接使用chrt -p <pid>查看到)

可以认为,RT优先级高的任务可以抢占RT优先级低的任务,此处的抢占是指:高优先级的任务不完成,低优先级任务就无法运行

而SCHED_FIFO和SCHED_RR的RT优先级范围是[1,99],SCHED_OTHER的RT优先级必须为0,因此RT任务可以抢占普通任务

对于RT优先级相同(>0)的任务,SCHED_FIFO采用先进先出的策略,而SCHED_RR则采用平均的时间片轮转

(以上阐述是符合用户侧的状态且易于理解的,但rt优先级在内核侧的工作方式可能并没有想的那么简单,因为它并不会直接决定实时任务能抢占普通任务,而且在内核中实时任务的rt优先级是可以为0的,这和用户空间规定的[1,99]确实不同)

CFS算法

上面提到了:SCHED_OTHER是基于CFS算法的时间片轮转

而CFS算法则是一种在时间片轮转过程中,能针对不同进程的CFS优先级,对时间片长短进行偏置的方式

此处的CFS优先级和上述的RT优先级是完全不一样的概念,RT优先级意味着完全的抢占,而CFS优先级则是建立在进程都能运行的前提下

因此,CFS优先级只对具有相同RT优先级的进程有意义,CFS算法只存在于SCHED_OTHER中,此时所有进程的RT优先级均为0

而这个CFS优先级,它有个真正的名字,叫NICE值

在Linux内核中,可以通过以下这个数组将NICE值转换为权重

const int sched_prio_to_weight[40] = {

/* -20 */ 88761, 71755, 56483, 46273, 36291,

/* -15 */ 29154, 23254, 18705, 14949, 11916,

/* -10 */ 9548, 7620, 6100, 4904, 3906,

/* -5 */ 3121, 2501, 1991, 1586, 1277,

/* 0 */ 1024, 820, 655, 526, 423,

/* 5 */ 335, 272, 215, 172, 137,

/* 10 */ 110, 87, 70, 56, 45,

/* 15 */ 36, 29, 23, 18, 15,

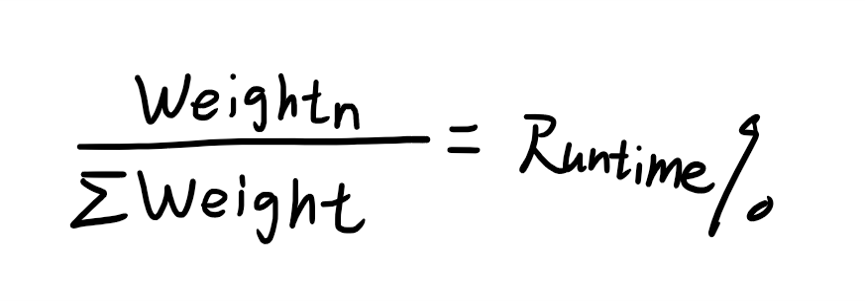

};然后再通过下面这条公式,算出允许的进程负载

上面这堆数值带来的规律是,对于两个进程来说,每提升1的NICE值,会使进程能取得的CPU时间减少10%

优先级的修改

用户空间可以使用chrt修改进程的调度策略和RT优先级renice修改进程的CFS NICE值

内核空间可以使用int sched_setscheduler(struct task_struct *p, int policy, const struct sched_param *param)修改进程调度策略和实时优先级void set_user_nice(struct task_struct *p, long nice)修改进程的NICE值

正文

以下内容才是这篇文章探究的关键点

优先级在内核侧的存储

在Linux关键的PCB中,存在以下内容

include/linux/sched.h

struct task_struct {

......

int prio;

int static_prio;

int normal_prio;

unsigned int rt_priority;

unsigned int policy;

......

};其中包含了各种优先级和调度策略,接下来就逐一探究其存储了什么内容

NICE值

直接从设置NICE值的函数入手,看看东西被存到了哪里

kernel/sched/core.c

void set_user_nice(struct task_struct *p, long nice)

{

bool queued, running;

int old_prio, delta;

struct rq_flags rf;

struct rq *rq;

if (task_nice(p) == nice || nice < MIN_NICE || nice > MAX_NICE)

return;

/*

* We have to be careful, if called from sys_setpriority(),

* the task might be in the middle of scheduling on another CPU.

*/

rq = task_rq_lock(p, &rf);

update_rq_clock(rq);

/*

* The RT priorities are set via sched_setscheduler(), but we still

* allow the 'normal' nice value to be set - but as expected

* it wont have any effect on scheduling until the task is

* SCHED_DEADLINE, SCHED_FIFO or SCHED_RR:

*/

if (task_has_dl_policy(p) || task_has_rt_policy(p)) {

p->static_prio = NICE_TO_PRIO(nice);

goto out_unlock;

}

queued = task_on_rq_queued(p);

running = task_current(rq, p);

if (queued)

dequeue_task(rq, p, DEQUEUE_SAVE | DEQUEUE_NOCLOCK);

if (running)

put_prev_task(rq, p);

p->static_prio = NICE_TO_PRIO(nice);

set_load_weight(p, true);

old_prio = p->prio;

p->prio = effective_prio(p);

delta = p->prio - old_prio;

if (queued) {

enqueue_task(rq, p, ENQUEUE_RESTORE | ENQUEUE_NOCLOCK);

/*

* If the task increased its priority or is running and

* lowered its priority, then reschedule its CPU:

*/

if (delta < 0 || (delta > 0 && task_running(rq, p)))

resched_curr(rq);

}

if (running)

set_curr_task(rq, p);

out_unlock:

task_rq_unlock(rq, p, &rf);

}

EXPORT_SYMBOL(set_user_nice);可以看到,无论是RT进程还是普通进程,最终nice值都通过了一个NICE_TO_PRIO的宏,存储到了static_prio中

而这个宏是长这样的

include/linux/sched/prio.h

#define MAX_NICE 19

#define MIN_NICE -20

#define NICE_WIDTH (MAX_NICE - MIN_NICE + 1)

/*

* Priority of a process goes from 0..MAX_PRIO-1, valid RT

* priority is 0..MAX_RT_PRIO-1, and SCHED_NORMAL/SCHED_BATCH

* tasks are in the range MAX_RT_PRIO..MAX_PRIO-1. Priority

* values are inverted: lower p->prio value means higher priority.

*

* The MAX_USER_RT_PRIO value allows the actual maximum

* RT priority to be separate from the value exported to

* user-space. This allows kernel threads to set their

* priority to a value higher than any user task. Note:

* MAX_RT_PRIO must not be smaller than MAX_USER_RT_PRIO.

*/

#define MAX_USER_RT_PRIO 100

#define MAX_RT_PRIO MAX_USER_RT_PRIO

#define MAX_PRIO (MAX_RT_PRIO + NICE_WIDTH)

#define DEFAULT_PRIO (MAX_RT_PRIO + NICE_WIDTH / 2)

/*

* Convert user-nice values [ -20 ... 0 ... 19 ]

* to static priority [ MAX_RT_PRIO..MAX_PRIO-1 ],

* and back.

*/

#define NICE_TO_PRIO(nice) ((nice) + DEFAULT_PRIO)翻译一下就是,static_prio = 120 + nice

此时我们已经明白,对于任何任务,即使是NICE值无效的RT任务,其NICE值也会在换算后被存储到static_prio中

rt_priority

static void __setscheduler_params(struct task_struct *p,

const struct sched_attr *attr)

{

int policy = attr->sched_policy;

if (policy == SETPARAM_POLICY)

policy = p->policy;

/* Replace SCHED_FIFO with SCHED_RR to reduce latency */

p->policy = policy == SCHED_FIFO ? SCHED_RR : policy;

if (dl_policy(policy))

__setparam_dl(p, attr);

else if (fair_policy(policy))

p->static_prio = NICE_TO_PRIO(attr->sched_nice);

/*

* __sched_setscheduler() ensures attr->sched_priority == 0 when

* !rt_policy. Always setting this ensures that things like

* getparam()/getattr() don't report silly values for !rt tasks.

*/

p->rt_priority = attr->sched_priority;

p->normal_prio = normal_prio(p);

set_load_weight(p, true);

}rt_priority就是来自于sched_setscheduler中传递的param中的sched_priority,而这又对应到了syscall使用的sys_sched_setscheduler,也就是说,上方的两条设置rt优先级的命令,都将rt优先级赋值给了rt_priority,其值与赋值的值相等

normal_prio

normal不是普通,而是normalize的缩写

这个东西被称为归一化的优先级,也就是说,这个东西存在的意义主要在于,使得不同调度策略的任务在优先级上能够方便的比较

而它,其实就是通过一种算法,把RT优先级和NICE值两者融合在了一起,为所有任务呈现一个通用的优先级

include/linux/sched/prio.h

#define MAX_NICE 19

#define MIN_NICE -20

#define NICE_WIDTH (MAX_NICE - MIN_NICE + 1)

/*

* Priority of a process goes from 0..MAX_PRIO-1, valid RT

* priority is 0..MAX_RT_PRIO-1, and SCHED_NORMAL/SCHED_BATCH

* tasks are in the range MAX_RT_PRIO..MAX_PRIO-1. Priority

* values are inverted: lower p->prio value means higher priority.

*

* The MAX_USER_RT_PRIO value allows the actual maximum

* RT priority to be separate from the value exported to

* user-space. This allows kernel threads to set their

* priority to a value higher than any user task. Note:

* MAX_RT_PRIO must not be smaller than MAX_USER_RT_PRIO.

*/

#define MAX_USER_RT_PRIO 100

#define MAX_RT_PRIO MAX_USER_RT_PRIO

#define MAX_PRIO (MAX_RT_PRIO + NICE_WIDTH)

#define DEFAULT_PRIO (MAX_RT_PRIO + NICE_WIDTH / 2)

/*

* Convert user-nice values [ -20 ... 0 ... 19 ]

* to static priority [ MAX_RT_PRIO..MAX_PRIO-1 ],

* and back.

*/

#define NICE_TO_PRIO(nice) ((nice) + DEFAULT_PRIO)

include/linux/sched/deadline.h

/*

* SCHED_DEADLINE tasks has negative priorities, reflecting

* the fact that any of them has higher prio than RT and

* NORMAL/BATCH tasks.

*/

#define MAX_DL_PRIO 0

kernel/sched/core.c

/*

* __normal_prio - return the priority that is based on the static prio

*/

static inline int __normal_prio(struct task_struct *p)

{

return p->static_prio;

}

/*

* Calculate the expected normal priority: i.e. priority

* without taking RT-inheritance into account. Might be

* boosted by interactivity modifiers. Changes upon fork,

* setprio syscalls, and whenever the interactivity

* estimator recalculates.

*/

static inline int normal_prio(struct task_struct *p)

{

int prio;

if (task_has_dl_policy(p))

prio = MAX_DL_PRIO-1;

else if (task_has_rt_policy(p))

prio = MAX_RT_PRIO-1 - p->rt_priority;

else

prio = __normal_prio(p);

return prio;

}从上面这段代码中可以看到,对于SCHED_OTHER类型的任务,其normal_prio就是static_prio

而对于RT类型的任务,其normal_prio为99-rt_priority

在于RT任务的优先级范围[0,99]对照之后

我们可以得出下方的结论

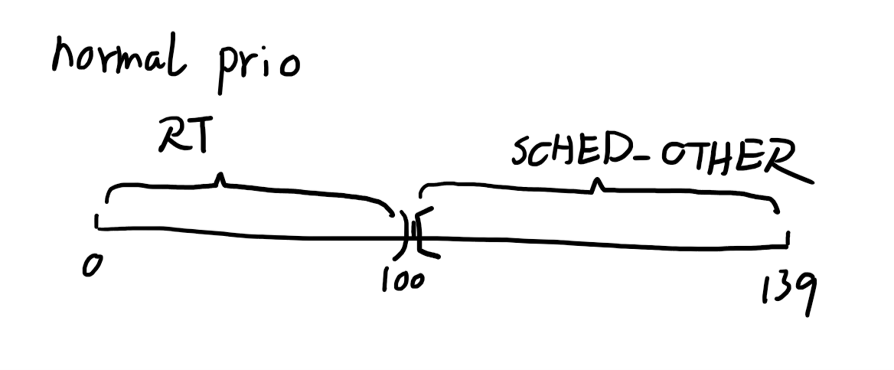

normal_prio的分布是连续的,其中区间[0,99]属于RT任务,大小由RT优先级决定

[100,139]属于普通任务,大小由NICE值决定

嗯?有没有发现奇怪的地方,为什么99是闭区间呢?RT任务的RT优先级的范围不是从1开始的么,这我在上面有提到过,内核中任务的RT优先级是可以被设置为0的,即使用户空间并不允许这么做

prio

别忘了task_struct里面还有个就叫做prio的东西

这个东西有另一个叫法:effective_prio 有效优先级

这个东西似乎是RT任务调度优先的真正参考依据,而不是rt_priority

rt.c:274: return rq->rt.highest_prio.curr > prev->prio &&

rt.c:386: plist_node_init(&p->pushable_tasks, p->prio);

rt.c:390: if (p->prio < rq->rt.highest_prio.next)

rt.c:391: rq->rt.highest_prio.next = p->prio;

rt.c:402: rq->rt.highest_prio.next = p->prio;

rt.c:511: if (rt_rq->highest_prio.curr < curr->prio)

rt.c:546: return p->prio != p->normal_prio;

rt.c:914: return rt_task_of(rt_se)->prio;

rt.c:1542: curr->prio <= p->prio))) {

rt.c:1553: p->prio < cpu_rq(target)->rt.highest_prio.curr))

rt.c:1596: if (p->prio < rq->curr->prio) {

rt.c:1614: if (p->prio == rq->curr->prio && !test_tsk_need_resched(rq->curr))

rt.c:1960: if (lowest_rq->rt.highest_prio.curr <= task->prio) {

rt.c:1991: if (lowest_rq->rt.highest_prio.curr > task->prio)

rt.c:2051: if (unlikely(next_task->prio < rq->curr->prio)) {

rt.c:2351: if (p && (p->prio < this_rq->rt.highest_prio.curr)) {

rt.c:2363: if (p->prio < src_rq->curr->prio)

rt.c:2399: rq->curr->prio <= p->prio))

rt.c:2475: if (p->prio < rq->curr->prio && cpu_online(cpu_of(rq)))

rt.c:2496: if (oldprio < p->prio)

rt.c:2503: if (p->prio > rq->rt.highest_prio.curr)

rt.c:2507: if (oldprio < p->prio)

rt.c:2516: if (p->prio < rq->curr->prio)据说是因为一些普通任务在某些情况下也会被boost成RT类型的任务?

/*

* Calculate the current priority, i.e. the priority

* taken into account by the scheduler. This value might

* be boosted by RT tasks, or might be boosted by

* interactivity modifiers. Will be RT if the task got

* RT-boosted. If not then it returns p->normal_prio.

*/

static int effective_prio(struct task_struct *p)

{

p->normal_prio = normal_prio(p);

/*

* If we are RT tasks or we were boosted to RT priority,

* keep the priority unchanged. Otherwise, update priority

* to the normal priority:

*/

if (!rt_prio(p->prio))

return p->normal_prio;

return p->prio;

}不过按照这东西的生成方法来看,大多数情况下,它都是和normal_prio保持一致的

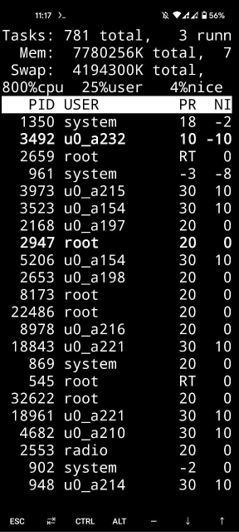

TOP命令中的优先级

NI很明显是NICE值,那PR又是什么,看这个范围,好像啥也不是,有时甚至还会出现字符串RT?

此处忽略实验过程,直接说结论

NI = normal_prio - 100

不过也有可能是prio - 100,不过这俩东西基本都是相等的,我也不知道到底是哪个

当NI<=-10时,TOP中显示为RT,并不是网上有些说法中所谓的最优先才显示为RT

结

至于这些优先级在内核中具体被使用的方式,有待进一步研究